Yesterday a mid-level analyst in a three-letter agency found a Telegram channel pushing the exact same meme in Lagos, Lima, and Liverpool—within 90 seconds. That is not organic virality; that is covert foreign influence, and it is happening right now. If your mandate is protecting democratic processes, military deployments, or critical supply chains, OSINT covert foreign influence detection is no longer the side hustle of a bored intern. It is the front line.

I have spent the last two decades breaking into banks, ballistic missile subcontractors, and ballot advertising networks. The single commonality? Adversaries hide in the noise of open data. Today we will cut through that noise, show you the repeatable workflow, and hand you the cheat codes so you can stop laughing at obviously fake profile pictures and start attributing campaigns to a specific building in St. Petersburg or Shenzhen.

Why 2025 Hits Different

Old-school sock-puppet hunting meant scrolling through 30-follower Twitter eggs. In 2025 we face:

- LLM-generated journalists who file 500-word op-eds in perfect idiomatic English

- Deep-faked local radio hosts injecting synthetic 30-second political ads into Spotify ad marketplaces

- Cross-platform “narrative laundering” where the same storyline hops from fringe blogs to mainstream media in under 48 hours

- Agentic botnets that buy real SIM cards, solve CAPTCHAs, and pass KYC selfies

Your classified SIGINT will not catch that, because the payload never touches an encrypted channel—it is out in the open, masquerading as “public opinion.”

If you need a refresher on how intelligence teams fuse open and classified data, read Bridging OSINT and Classified Intelligence. It frames exactly why OSINT is now the tip of the spear.

OSINT Covert Foreign Influence Detection: The 6-Phase Workflow

Below is the workflow I teach to Special Operations and Interpol cyber units. Feel free to steal it; everyone else does.

| Phase | Goal | Tooling Cheat Sheet |

|---|---|---|

| 1. Seed Collection | Harvest raw posts, comments, hashes, EXIF, audio fingerprints | Twint forks, Telegram-scraper, Pushshift dumps, CrowdTangle API exports |

| 2. Enrichment | Add author alias expansion, profile photo hashes, blockchain addresses | Kindi graph expansion, Pimeyes, Tineye, WhatsMyName, BitcoinAbuse |

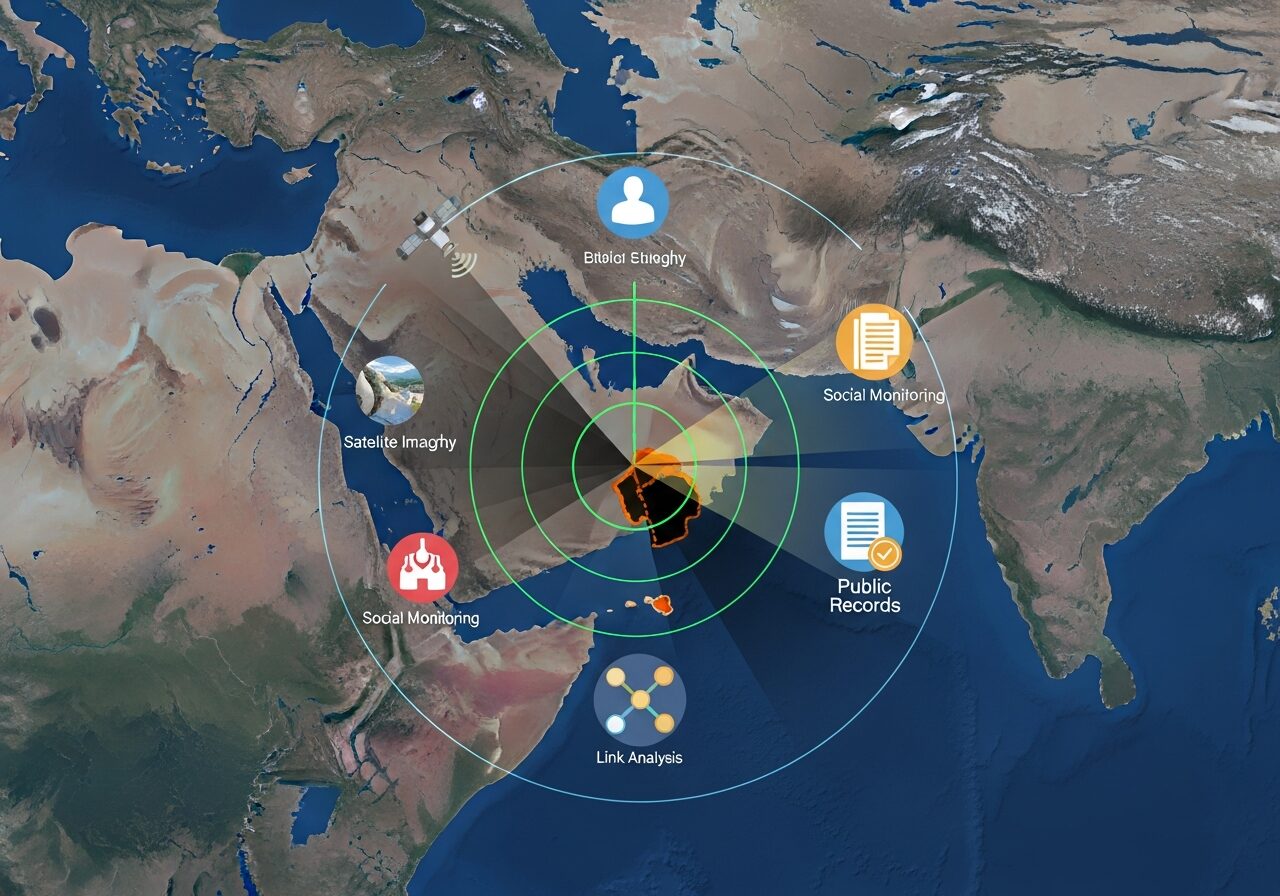

| 3. Network Mapping | Build co-mention/co-hashtag graphs to surface bot swarms | Gephi, MISP, Maltego, Kindi link-analysis with temporal slider |

| 4. Anomaly Scoring | Flag accounts with entropy spikes or synchronized actions | Self-built Python, or push data to Kindi’s ML anomaly detector |

| 5. Attribution | Correlate with infrastructure leaks, HR databases, travel records | HaveIBeenPwned, Parcels, OCCRP Aleph, Investigative Dashboard |

| 6. Disruption & Debrief | Platform takedown, diplomatic demarche, public expose | IO-indicators in STIX/TAXII, media liaisons, legal orders |
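You can prototype Phase 3 before opening Gephi or Maltego. The sketch below is minimal and illustrative. It assumes posts arrive as hypothetical `(account, hashtags)` pairs. The names `co_hashtag_edges` and `dense_pairs` are invented here and are not part of any tool in the table. The code adds one unit of edge weight between two accounts for every hashtag they share, so tightly coordinated accounts show up as heavy edges.

```python
from collections import defaultdict
from itertools import combinations


def co_hashtag_edges(posts):
    """Build weighted co-hashtag edges between accounts.

    posts: iterable of (account, hashtags) pairs (hypothetical input shape).
    Returns {(account_a, account_b): shared_hashtag_count} with account_a < account_b.
    """
    # Map each normalized hashtag to the set of accounts that used it.
    users_by_tag = defaultdict(set)
    for account, tags in posts:
        for tag in tags:
            users_by_tag[tag.lower().lstrip("#")].add(account)

    # Each pair of accounts gains +1 weight per hashtag they both used.
    edges = defaultdict(int)
    for accounts in users_by_tag.values():
        for a, b in combinations(sorted(accounts), 2):
            edges[(a, b)] += 1
    return dict(edges)


def dense_pairs(edges, min_shared=3):
    """Keep only account pairs that share at least `min_shared` hashtags."""
    return {pair: w for pair, w in edges.items() if w >= min_shared}
```

A heavy pair is a lead, not a verdict. Two genuine activists following the same story will also share hashtags. The edge dictionary converts easily into a Source, Target, Weight edge list for graph tools such as Gephi.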

Notice that Phases 2, 3, and 4 explicitly call out Kindi. The platform auto-expands aliases across 75+ services, scores behavioral anomalies, and, crucially, lets a team of eight analysts operate like twenty-five. If you are still hand-pivoting in Excel, congratulations: you are outnumbered by a cloud of virtual machines that never sleep.
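If you take the self-built Python route in Phase 4, one simple anomaly score is the Shannon entropy of an account’s posting intervals. This is a minimal sketch under stated assumptions, not Kindi’s detector:

- Timestamps are POSIX seconds.
- Gaps between posts are bucketed into 60-second bins.
- The 1-bit threshold and 20-post minimum are illustrative guesses that you would tune on your own data.

An account that posts exactly every five minutes lands in a single bin and scores zero bits. The names `interval_entropy` and `flag_scheduled` are hypothetical.

```python
import math
from collections import Counter


def interval_entropy(timestamps, bin_seconds=60):
    """Shannon entropy (bits) of an account's binned inter-post intervals.

    Near-zero entropy means the account posts on a rigid schedule, a common
    (but not conclusive) sign of automation.
    """
    ts = sorted(timestamps)
    gaps = [b - a for a, b in zip(ts, ts[1:])]
    if not gaps:
        return 0.0
    counts = Counter(int(g // bin_seconds) for g in gaps)
    n = len(gaps)
    probs = [c / n for c in counts.values()]
    return sum(-p * math.log2(p) for p in probs)


def flag_scheduled(accounts, max_bits=1.0, min_posts=20):
    """Return account names whose interval entropy is at or below `max_bits`.

    accounts: {name: [timestamps]}; accounts with too few posts are skipped.
    """
    flagged = []
    for name, stamps in accounts.items():
        if len(stamps) >= min_posts and interval_entropy(stamps) <= max_bits:
            flagged.append(name)
    return flagged
```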

Real-World Example: Meme-Jacking a Peacekeeping Mission

In September 2024 a EUFOR peacekeeping convoy in Bosnia was met by “spontaneous” protesters waving identical memes printed on A4 sheets. Within six hours the same meme had appeared in 412 Instagram stories and on 68 Facebook pages, reaching 2.4 million impressions. Tracing the hashtag’s trajectory surfaced a Serbian granny knitting circle, a Ghanaian crypto hustle group, and a defunct 2016 Bernie Sanders page, all posting within a 90-second window. That is not grassroots; that is script-driven.
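The 90-second coincidence is the kind of signal worth automating. The minimal sketch below assumes each post has already been reduced to a hypothetical `content_key`, such as a normalized hashtag or an image hash, plus a POSIX timestamp. For each key, it slides a time window over that key’s posts and reports the largest set of distinct accounts that fit inside the window. The 90-second window and the three-account minimum mirror the anecdote; they are assumptions, not calibrated values.

```python
from collections import defaultdict


def synchronized_bursts(posts, window_seconds=90, min_accounts=3):
    """Find content posted by many distinct accounts inside one short window.

    posts: iterable of (account, content_key, timestamp) tuples.
    Returns {content_key: sorted accounts in its largest burst}.
    """
    by_key = defaultdict(list)
    for account, key, ts in posts:
        by_key[key].append((ts, account))

    bursts = {}
    for key, items in by_key.items():
        items.sort()
        best = set()
        start = 0
        # Two-pointer sliding window over the time-sorted posts for this key.
        for end in range(len(items)):
            while items[end][0] - items[start][0] > window_seconds:
                start += 1
            accounts = {acct for _, acct in items[start:end + 1]}
            if len(accounts) > len(best):
                best = accounts
        if len(best) >= min_accounts:
            bursts[key] = sorted(best)
    return bursts
```

Rebuilding the account set at every step keeps the code short but is quadratic in the worst case. A counter-based window would scale better to millions of posts.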

We pulled 4,312 images, ran perceptual hashing to cluster near-duplicates, and found that 93 percent shared a hidden watermark: two Cyrillic characters blended into the background noise. The OSINT pivot: we searched for that watermark in PDFs posted to public Telegram channels and hit a 2021 Russian-language media kit pitching “anti-NATO narrative bundles.” Attribution chain complete, diplomatic protest filed, meme neutralized in 36 hours.
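The post does not say which perceptual hash the team used. The sketch below swaps in a difference hash (dHash), a simpler relative of the DCT-based pHash mentioned in the FAQ. It is written in plain Python and takes a grayscale image as rows of 0 to 255 integers. Nearest-neighbour downsampling and the 5-bit clustering threshold are simplifying assumptions.

The sketch also computes an MD5 of the same pixels to show why cryptographic hashes fail at this job. Brightening every pixel leaves the dHash unchanged but produces a different MD5.

```python
import hashlib


def dhash(pixels, hash_size=8):
    """Difference hash of a grayscale image given as rows of 0-255 ints.

    Downsamples to (hash_size + 1) x hash_size with nearest-neighbour sampling,
    then emits one bit per pair: 1 if a pixel is brighter than its right neighbour.
    """
    h, w = len(pixels), len(pixels[0])
    cols, rows = hash_size + 1, hash_size
    small = [[pixels[r * h // rows][c * w // cols] for c in range(cols)]
             for r in range(rows)]
    bits = 0
    for row in small:
        for left, right in zip(row, row[1:]):
            bits = (bits << 1) | (1 if left > right else 0)
    return bits


def hamming(a, b):
    """Number of differing bits between two integer hashes."""
    return bin(a ^ b).count("1")


def md5_of(pixels):
    """Cryptographic hash of the raw pixel bytes; any change flips it."""
    return hashlib.md5(bytes(p for row in pixels for p in row)).hexdigest()


def cluster_by_hash(hashes, max_distance=5):
    """Greedy single pass: join the first cluster whose seed hash is close enough."""
    clusters = []  # list of (seed_hash, [image_ids])
    for image_id, hv in hashes.items():
        for seed, members in clusters:
            if hamming(seed, hv) <= max_distance:
                members.append(image_id)
                break
        else:
            clusters.append((hv, [image_id]))
    return [members for _, members in clusters]
```

In practice you would likely use Pillow with the `imagehash` package. Its `dhash` and `phash` functions return hash objects that you can subtract to get a Hamming distance.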

This speed matters. Military teams already use OSINT to boost battlefield awareness; influence operations are simply the cognitive dimension of the same battlespace.

Indicators That Scream “State-Run Troll Farm”

- Profile photos cropped from 2014 beauty-contest press packs (reverse-image-search in Yandex, not Google, for Eastern European overlap)

- Accounts created in bursts—five handles within 90 seconds, all from the same ASN in Ulyanovsk

- Emojis placed in the exact same ordinal position across 4,000 tweets (Unicode fingerprinting)

- Video metadata showing identical software library versions across “independent” vloggers three continents apart

- Crypto donation addresses clustering into a single Wasabi CoinJoin coordinator

When you spot three or more of those, congratulations—you have found the digital exhaust of a professional influence factory, not a bored teenager in a basement.
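The “three or more” rule is simple enough to encode directly. In this minimal sketch, `AccountSignals`, `emoji_signature`, and the other names are hypothetical. Only the emoji indicator is actually computed; the other four flags are assumed to come from upstream checks. Emoji detection uses a rough code-point range test rather than the full Unicode emoji specification. "Position" here means the word index at which each emoji appears.

```python
from collections import Counter
from dataclasses import dataclass


def is_emoji(ch):
    # Rough approximation (an assumption): Misc Symbols, Dingbats, and the main
    # pictograph blocks only, not the full Unicode emoji specification.
    cp = ord(ch)
    return 0x2600 <= cp <= 0x27BF or 0x1F300 <= cp <= 0x1FAFF


def emoji_signature(text):
    """(word index, emoji) pairs: where each emoji sits among whitespace tokens."""
    return tuple((i, ch) for i, tok in enumerate(text.split())
                 for ch in tok if is_emoji(ch))


def shared_signature_ratio(posts):
    """Share of emoji-bearing posts that use the single most common signature."""
    sigs = [s for s in map(emoji_signature, posts) if s]
    if not sigs:
        return 0.0
    return Counter(sigs).most_common(1)[0][1] / len(sigs)


@dataclass
class AccountSignals:
    """Hypothetical per-account flags, one per indicator in the list above."""
    recycled_photo: bool = False
    burst_created: bool = False
    emoji_position_match: bool = False
    shared_video_toolchain: bool = False
    shared_coinjoin_cluster: bool = False


def looks_like_troll_farm(signals, threshold=3):
    """Apply the 'three or more indicators' rule of thumb."""
    hits = sum([signals.recycled_photo, signals.burst_created,
                signals.emoji_position_match, signals.shared_video_toolchain,
                signals.shared_coinjoin_cluster])
    return hits >= threshold
```

A `shared_signature_ratio` near 1.0 across thousands of posts from supposedly unrelated accounts is what would set `emoji_position_match`. The exact cut-off is yours to choose.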

Automate or Die: Scaling to Continental Data Volumes

Manual investigation caps you at maybe 50 accounts per day. A mid-tier adversary spins up 50,000. You need automation that does not sacrifice precision.

We pipeline everything through Kindi’s headless scrapers. They spin up ephemeral residential proxies, rotate device fingerprints, and store raw HTML in S3-compatible buckets. Because the platform auto-tags entities in STIX 2.1, your threat-intel team can pump results straight into a TAXII server or your on-prem MISP instance. Translation: analysts spend their time making decisions, not parsing JSON.
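To make the STIX 2.1 hand-off concrete, here is a minimal sketch of an Indicator for a file hash, wrapped in a Bundle. It uses plain dictionaries so it runs without dependencies. The property names follow the STIX 2.1 specification. However, `image_hash_indicator` and the example name are hypothetical, and this is not Kindi’s export format. For production use, the OASIS `stix2` Python library builds and validates these objects for you.

```python
import json
import uuid
from datetime import datetime, timezone


def stix_timestamp(dt):
    """Format an aware datetime as a STIX 2.1 UTC timestamp with milliseconds."""
    return dt.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%S.%f")[:-3] + "Z"


def image_hash_indicator(sha256_hex, name, now=None):
    """Build a STIX 2.1 Indicator object matching a file's SHA-256 hash."""
    ts = stix_timestamp(now or datetime.now(timezone.utc))
    return {
        "type": "indicator",
        "spec_version": "2.1",
        "id": f"indicator--{uuid.uuid4()}",
        "created": ts,
        "modified": ts,
        "name": name,
        "pattern": f"[file:hashes.'SHA-256' = '{sha256_hex}']",
        "pattern_type": "stix",
        "valid_from": ts,
    }


def bundle(objects):
    """Wrap STIX objects in a Bundle (STIX 2.1 bundles carry no spec_version)."""
    return {"type": "bundle", "id": f"bundle--{uuid.uuid4()}", "objects": objects}


def to_json(obj):
    """Serialize for upload or archiving."""
    return json.dumps(obj, indent=2)
```

This sketch does not push the bundle to a TAXII 2.1 collection, because that step depends on your server and client library.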

For a deeper dive on why automation beats interns, see Automating OSINT Investigations. It quantifies the cost of delay in influence operations—spoiler: every hour you wait adds roughly 1.8 percent more reach.

Legal & Ethical Tripwires

Influence campaigns love to exploit the seams between jurisdictions. A troll farm may violate U.S. election law, but if the server sits in Montenegro and the operative holds a Saint Kitts passport, your options narrow. Always:

- Collect from publicly available sources—no scraping behind logins

- Preserve chain-of-custody metadata (SHA-256 of raw HTML, PCAPs if possible)

- Coordinate with legal attachés before public attribution—diplomatic blowback is real

- Document dual-use risk: exposing your methods helps the next actor hide better
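The chain-of-custody bullet is the easiest one to get right from day one. The minimal sketch below assumes you keep the raw HTML bytes exactly as retrieved. It records the URL, a UTC timestamp, the collector, and a SHA-256 digest. Re-hashing the bytes later proves that nothing changed. The record layout and the `custody_record` and `verify` helpers are hypothetical, so adapt the fields to whatever your legal office requires.

```python
import hashlib
from datetime import datetime, timezone


def custody_record(url, raw_html: bytes, collector, retrieved_at=None):
    """Evidence record: what was captured, when, by whom, and its SHA-256."""
    retrieved_at = retrieved_at or datetime.now(timezone.utc)
    return {
        "url": url,
        "retrieved_at": retrieved_at.isoformat(),
        "collector": collector,
        "sha256": hashlib.sha256(raw_html).hexdigest(),
        "size_bytes": len(raw_html),
    }


def verify(record, raw_html: bytes):
    """Re-hash stored content; any edit to the bytes breaks the match."""
    return hashlib.sha256(raw_html).hexdigest() == record["sha256"]
```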

If you need a template for compliance-heavy environments, check the guidance in The New Reality of OSINT Compliance.

External Authority: NSA’s 2025 Playbook

The U.S. National Security Agency released a rare public document last month: “Best Practices for Identifying and Disrupting Information Operations” (PDF). Page 17 lists the same anomaly scores we just discussed. Read it, cite it, and you will keep your general counsel happy.

Putting It All Together: A One-Page Checklist

Print this, laminate it, stick it to your SOC wall:

- Collect raw content within 30 minutes of viral spike

- Hash every image, video, audio

- Expand aliases with Kindi or equivalent

- Graph co-mentions; score anomalies

- Correlate with infrastructure & leak data

- Write STIX report; push to TAXII

- Brief stakeholders; prep takedown or demarche

- Archive everything—adversaries recycle
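The first checklist item assumes you can tell when a spike starts. One minimal approach flags the first time bucket that jumps far above the recent mean. It rests on illustrative assumptions: per-minute mention counts, a 30-bucket trailing baseline, and a 4-sigma threshold. The standard deviation is floored at 1.0 so that a perfectly flat baseline does not make every small uptick look infinite. `first_spike` is a hypothetical name.

```python
import statistics


def first_spike(counts, baseline=30, z_threshold=4.0):
    """Index of the first bucket whose count exceeds mean + z * stdev of the
    preceding `baseline` buckets, or None if no spike is found.

    counts: mentions per time bucket (e.g. per minute) for a hashtag or hash.
    """
    for i in range(baseline, len(counts)):
        window = counts[i - baseline:i]
        mean = statistics.fmean(window)
        # Floor the spread so a flat baseline does not flag tiny changes.
        stdev = max(statistics.pstdev(window), 1.0)
        if counts[i] > mean + z_threshold * stdev:
            return i
    return None
```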

Conclusion

Foreign influence operations are not ghosts; they are supply chains staffed by humans who clock in, take breaks, and occasionally forget to toggle their VPN. With disciplined OSINT covert foreign influence detection, you can map those supply chains, starve them of reach, and expose the puppeteers before the show begins. The tech is ready, the playbooks exist, and the only missing piece is your team pulling the trigger faster than the troll farms can reload.

Want to strengthen your OSINT skills? Check out our free course.

Check out our OSINT courses for hands-on training.

And explore Kindi — our AI-driven OSINT platform built for speed and precision.

FAQ

How fast can a troll farm react to takedowns?

Within 6–12 hours. They keep backup domains and pre-aged accounts warming on the bench.

Do I need warrants to collect public social-media posts?

Generally no, but always confirm with your legal office—especially if you plan to use the data in court.

Which hash is best for image clustering?

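Perceptual hashing (pHash) beats MD5 for clustering. It survives resizing and minor color shifts, whereas changing a single byte of the file produces a completely different MD5 digest.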

Can encrypted messaging apps be monitored via OSINT?

Indirectly. Look for invite links, usernames, and cross-platform promotions. See our post on OSINT and Encrypted Messaging.
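Regular expressions are a good first pass at harvesting those invite links and usernames from scraped text. The minimal sketch below assumes three common link forms:

- `t.me/+<hash>` (private invite)
- `t.me/joinchat/<hash>` (legacy private invite)
- `t.me/<username>` (public account or channel)

It also assumes Telegram’s username rule of 5 to 32 letters, digits, or underscores, starting with a letter. Treat that rule as an assumption to verify. `telegram_refs` is a hypothetical helper.

```python
import re

# Private invite links: t.me/+<hash> or the older t.me/joinchat/<hash>.
INVITE_RE = re.compile(r"(?:https?://)?(?:t|telegram)\.me/(?:\+|joinchat/)([A-Za-z0-9_-]+)")
# Public usernames or channels: assumed 5-32 chars, starting with a letter.
PUBLIC_RE = re.compile(r"(?:https?://)?(?:t|telegram)\.me/([A-Za-z][A-Za-z0-9_]{4,31})\b")


def telegram_refs(text):
    """Collect private invite hashes and public usernames/channels from text."""
    invites = set(INVITE_RE.findall(text))
    # "joinchat" is a path segment, not a username, so drop it.
    public = {u for u in PUBLIC_RE.findall(text) if u.lower() != "joinchat"}
    return {"invites": sorted(invites), "public": sorted(public)}
```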

What is the single biggest mistake analysts make?

Confirmation bias—hunting only for evidence that supports the hypothesis instead of anomalies that break it.