Security Operations Centers face an unprecedented challenge today. Threat actors exploit visibility gaps faster than organizations can identify them. Meanwhile, log retention costs spiral while investigation capabilities suffer from poor data management practices.

The reality is stark for SOC analysts and triage specialists. Without comprehensive visibility, organizations operate blindly against sophisticated adversaries, and inadequate log retention creates forensic dead zones during critical investigations. Applying SOC visibility best practices turns these weaknesses into faster detection and more complete investigations.

Modern SOCs must balance three critical elements: comprehensive telemetry coverage, intelligent log retention, and cost-effective storage strategies. This balance determines whether security teams detect threats quickly or remain vulnerable to undetected breaches.

The SOC Visibility Triad: Foundation for Effective Security Monitoring

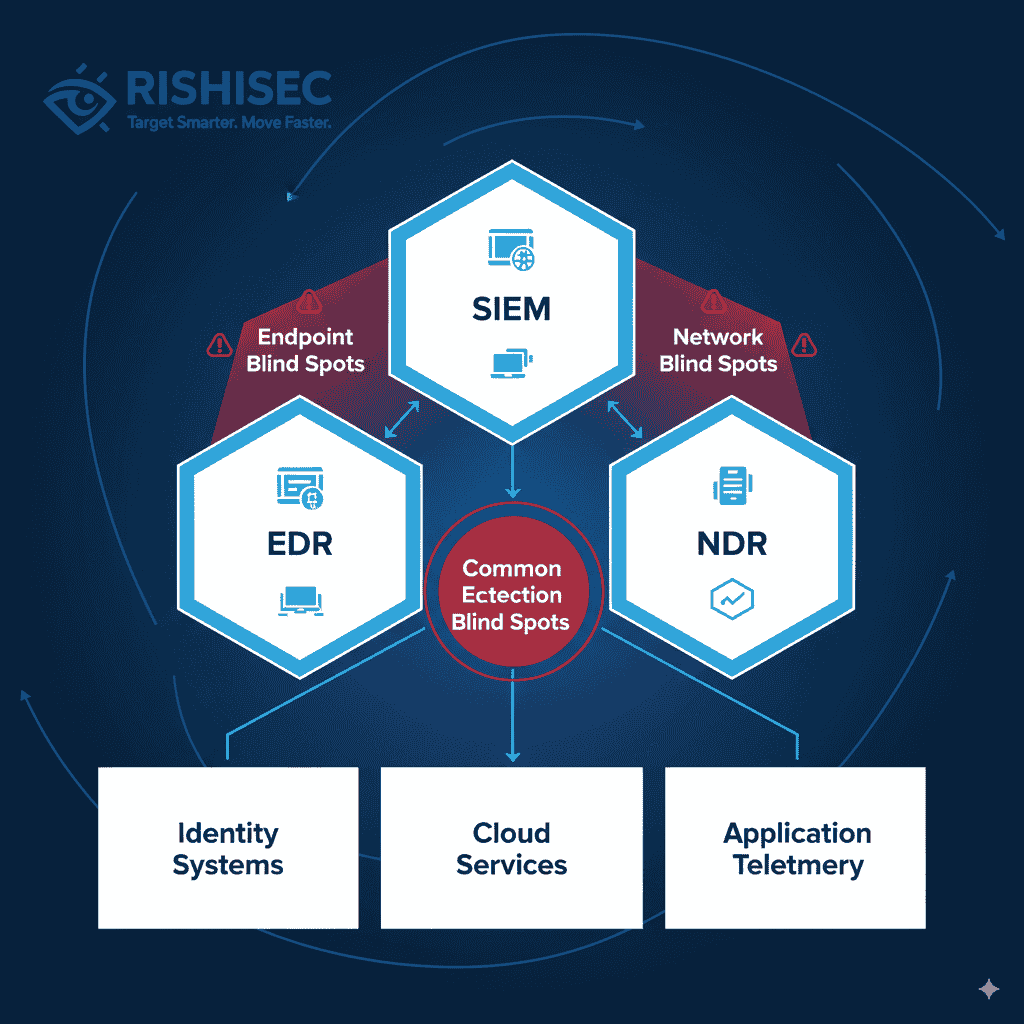

Effective SOC operations rely on the visibility triad: SIEM systems for centralized logging, EDR solutions for endpoint monitoring, and NDR platforms for network analysis. However, traditional implementations often miss critical blind spots that adversaries exploit.

Most organizations struggle with identity and cloud telemetry gaps. These blind spots include missing privilege escalation events, insufficient identity provider logging, and unmonitored cloud resource sprawl. Configuration drift and shadow services introduce further vulnerabilities.

According to recent industry research, organizations with comprehensive visibility detect threats 200 days faster than those with coverage gaps. Moreover, complete telemetry coverage reduces false positive rates by up to 60%, enabling analysts to focus on genuine threats.

The impact on SOC workflows is significant. Visibility gaps increase mean time to detection and response because threat paths remain unclear. Furthermore, incomplete telemetry creates alert fatigue through numerous low-context notifications that require extensive manual investigation.

| Visibility Component | Coverage Area | Common Blind Spots | Impact on Detection |
|---|---|---|---|
| SIEM Logging | Centralized log analysis | Cloud service logs, application logs | Missed lateral movement |
| EDR Monitoring | Endpoint activities | Mobile devices, IoT endpoints | Undetected malware persistence |
| NDR Analysis | Network traffic flows | Encrypted traffic, east-west flows | Hidden command and control |
| Identity Telemetry | Authentication events | Privilege escalation, federated access | Credential theft blindness |

Identifying Critical Blind Spots in Your Security Architecture

Identity telemetry represents the most significant blind spot for many organizations. Missing privilege escalation events allow attackers to gain administrative access undetected. Similarly, insufficient identity provider logging creates gaps in user activity tracking.

Cloud environments present unique visibility challenges. Unmonitored asset sprawl creates orphaned resources that attackers exploit. Additionally, shadow services deployed without security oversight become attack vectors that bypass traditional monitoring.

Log streaming delays compound these problems. Inconsistent or low-granularity metadata prevents accurate threat correlation. Consequently, security teams struggle to reconstruct attack timelines during incident response efforts.

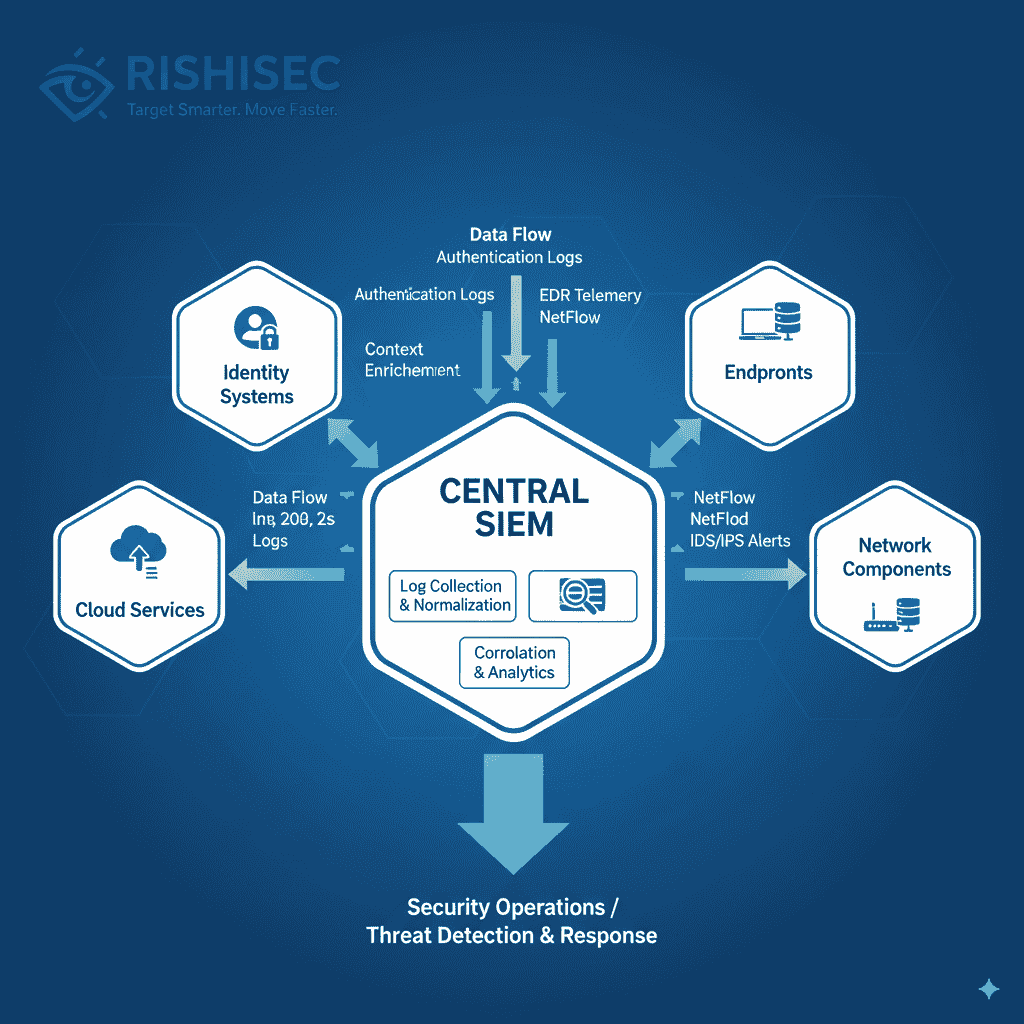

Implementing Effective SOC Visibility Best Practices

Successful visibility implementation starts with a comprehensive inventory of telemetry sources. Organizations must map current coverage against their monitoring requirements. This assessment reveals gaps where adversaries might operate undetected.
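A minimal sketch of that coverage mapping, assuming you can list which source names are currently arriving in your SIEM. The domains and source names in `REQUIRED_SOURCES` are hypothetical placeholders, not a standard taxonomy.

```python
from dataclasses import dataclass

# Hypothetical required sources per domain; replace with your own monitoring requirements.
REQUIRED_SOURCES = {
    "identity": {"idp_signin", "privilege_change", "mfa_event"},
    "cloud": {"aws_cloudtrail", "azure_activity_log", "gcp_audit_log"},
    "endpoint": {"edr_process", "edr_network"},
    "network": {"netflow", "dns"},
}


@dataclass
class GapReport:
    domain: str
    missing: set
    coverage: float  # fraction of required sources already collected


def find_gaps(collected: set) -> list:
    """Compare the sources actually being collected against the required inventory."""
    reports = []
    for domain, required in REQUIRED_SOURCES.items():
        missing = required - collected
        coverage = 1 - len(missing) / len(required)
        reports.append(GapReport(domain, missing, coverage))
    # Lowest coverage first, so the largest blind spots surface at the top
    return sorted(reports, key=lambda r: r.coverage)


collected = {"aws_cloudtrail", "edr_process", "edr_network", "netflow", "dns", "idp_signin"}
for report in find_gaps(collected):
    print(f"{report.domain:<10} {report.coverage:.0%} missing: {sorted(report.missing)}")
```

In this example the identity and cloud domains surface first, which mirrors the blind spots described above.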

Identity log centralization becomes critical for modern SOCs. Real-time streaming of identity provider logs, access control events, and privilege changes enables rapid detection of credential-based attacks. Furthermore, context enrichment linking identity events with network and endpoint data accelerates triage processes.

Cloud telemetry integration requires leveraging native provider tools alongside custom monitoring solutions. AWS CloudTrail, the Azure Monitor activity log, and Google Cloud Audit Logs provide essential visibility into control-plane operations. Supplementing this native telemetry with agent-based monitoring helps close the gaps it leaves, such as process activity inside virtual machines.

Prioritization strategies help manage visibility complexity. High-risk assets and users require enhanced monitoring coverage. Similarly, critical cloud resources need additional telemetry depth. This approach balances comprehensive coverage with operational efficiency.
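One way to make this prioritization repeatable is a simple risk score that maps each asset to a monitoring tier. The sketch below is hypothetical: the 1-5 criticality scale, the weights, and the tier thresholds are illustrative assumptions to be calibrated against your own threat model.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    name: str
    criticality: int         # assumed scale: 1 (low) .. 5 (business-critical)
    privileged_access: bool  # holds or grants administrative rights
    internet_facing: bool


def risk_score(asset: Asset) -> int:
    # Illustrative weights only
    score = asset.criticality * 2
    if asset.privileged_access:
        score += 3
    if asset.internet_facing:
        score += 2
    return score


def monitoring_tier(asset: Asset) -> str:
    """Map a risk score to a hypothetical monitoring depth tier."""
    score = risk_score(asset)
    if score >= 10:
        return "enhanced"  # deepest telemetry: identity, process, and flow data
    if score >= 6:
        return "standard"
    return "baseline"


print(monitoring_tier(Asset("dc01", 5, True, False)))  # enhanced
```

A domain controller scores high on both criticality and privilege, so it lands in the enhanced tier, while a low-value kiosk would stay at baseline coverage.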

However, organizations must consider implementation trade-offs. Increased telemetry volume raises bandwidth and storage costs, and privacy compliance requirements constrain how identity logs are handled. Given these costs, integrating external threat intelligence helps SOCs decide which telemetry sources deliver the most security value.

Addressing Cloud and Identity Telemetry Gaps

Cloud visibility requires continuous monitoring of configuration changes, resource deployments, and access patterns. Organizations should implement automated discovery processes that identify new cloud assets immediately. Furthermore, configuration drift detection prevents security gaps from developing over time.
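Configuration drift detection reduces to comparing an approved baseline against the current state. This is a minimal sketch, assuming both snapshots have already been exported as flat key-value dictionaries; the setting names in the example are hypothetical.

```python
def detect_drift(baseline: dict, current: dict) -> dict:
    """Return settings added, removed, or changed relative to an approved baseline."""
    added = {k: current[k] for k in current.keys() - baseline.keys()}
    removed = {k: baseline[k] for k in baseline.keys() - current.keys()}
    changed = {
        k: (baseline[k], current[k])
        for k in baseline.keys() & current.keys()
        if baseline[k] != current[k]
    }
    return {"added": added, "removed": removed, "changed": changed}


# Hypothetical storage-bucket settings captured at two points in time
baseline = {"public_access_block": True, "logging_enabled": True, "encryption": "kms"}
current = {"public_access_block": False, "logging_enabled": True,
           "encryption": "kms", "website_hosting": True}
print(detect_drift(baseline, current))
```

Any non-empty result is a candidate alert; in practice you would also need to flatten nested configuration documents before comparing them.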

Identity monitoring extends beyond traditional authentication logs. Modern SOCs need visibility into privilege escalation attempts, unusual access patterns, and federated authentication flows. Additionally, service account monitoring prevents attackers from abusing automated credentials.

Integration challenges require careful planning. Telemetry sources differ in format (JSON, syslog, CSV) and in transport (agents, APIs, streaming). Normalizing them into a common schema keeps field names, timestamps, and identifiers consistent, which cross-source correlation depends on.
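A minimal normalization sketch follows. The input records are simplified shapes modeled on a CloudTrail event and an identity provider sign-in record, and the output schema (`timestamp`, `user`, `src_ip`, `action`, `source`) is a hypothetical common format, not an established standard; verify field names against your providers' documentation.

```python
from datetime import datetime, timezone


def parse_ts(value: str) -> datetime:
    # Sample timestamps use a trailing "Z"; fromisoformat only accepts it from Python 3.11
    return datetime.fromisoformat(value.replace("Z", "+00:00")).astimezone(timezone.utc)


def normalize(source: str, record: dict) -> dict:
    """Map a raw record into a minimal common schema (hypothetical field names)."""
    if source == "cloudtrail":
        return {
            "timestamp": parse_ts(record["eventTime"]),
            "user": record["userIdentity"].get("arn", "unknown"),
            "src_ip": record.get("sourceIPAddress"),
            "action": record["eventName"],
            "source": source,
        }
    if source == "idp_signin":
        return {
            "timestamp": parse_ts(record["createdDateTime"]),
            "user": record["userPrincipalName"],
            "src_ip": record.get("ipAddress"),
            "action": "sign_in",
            "source": source,
        }
    raise ValueError(f"no mapping for source {source!r}")
```

Raising on unknown sources, rather than passing records through unchanged, makes new unmapped feeds visible instead of silently degrading correlation.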

Log Retention Strategies for Cost-Effective Security Operations

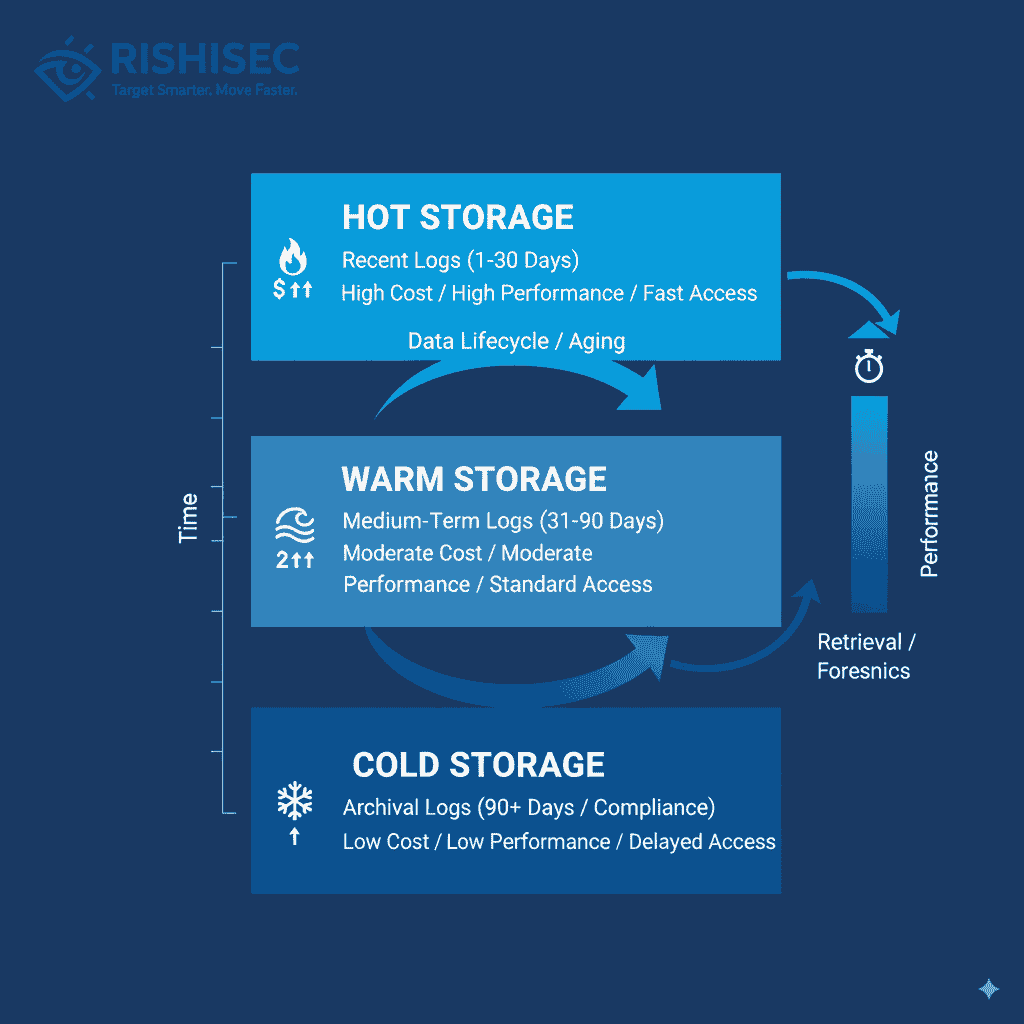

A long-term retention strategy must balance investigative value against storage costs. Regulatory compliance requirements often mandate specific retention periods, but organizations need strategies that support both compliance and operational needs.

Tiered storage architectures provide cost-effective solutions for extended retention. Recent logs remain in high-performance storage for active monitoring. Meanwhile, older logs move to cold storage tiers that reduce costs while maintaining accessibility for investigations.
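Tier placement is usually driven by log age. This sketch assumes three tiers (hot, warm, cold) with hypothetical boundaries of 90 and 365 days; a hot window of 30-90 days is a common starting point, but the right values depend on your query patterns.

```python
from datetime import date

# Assumed tier boundaries in days
HOT_DAYS = 90
WARM_DAYS = 365


def storage_tier(log_date: date, today: date) -> str:
    """Pick a storage tier from the age of a day's logs."""
    age = (today - log_date).days
    if age < 0:
        raise ValueError("log date is in the future")
    if age <= HOT_DAYS:
        return "hot"   # fast, indexed storage for active monitoring
    if age <= WARM_DAYS:
        return "warm"  # cheaper storage for occasional queries
    return "cold"      # archive storage, retrieved on demand


print(storage_tier(date(2024, 1, 1), today=date(2024, 6, 30)))  # warm
```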

Classification strategies determine which logs require extended retention. Authentication logs, configuration changes, and security events typically need longer storage periods. Conversely, routine application logs might use shorter retention windows to manage costs.

Automation becomes essential for retention management. Automated workflows handle log archival, compression, and deletion based on predefined policies. This approach ensures consistent retention practices while reducing administrative overhead.
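A retention workflow typically evaluates each log batch against a policy table. The sketch below is illustrative: the category names and windows loosely follow typical ranges (1-2 years for authentication and security events, 3-5 years for configuration changes, 90-180 days for network flows), and the 90-day archive threshold is an assumption. Real values must come from your compliance obligations.

```python
from dataclasses import dataclass
from datetime import date

# Illustrative retention windows in days
RETENTION_DAYS = {
    "authentication": 730,
    "security_event": 730,
    "config_change": 1825,
    "network_flow": 180,
    "application": 90,
}
ARCHIVE_AFTER_DAYS = 90  # assumed: move to compressed cold storage after this age


@dataclass
class LogBatch:
    category: str
    created: date
    legal_hold: bool = False


def retention_action(batch: LogBatch, today: date) -> str:
    """Decide whether a batch is retained, archived, deleted, or held."""
    if batch.legal_hold:
        return "hold"  # legal holds override every other rule
    age = (today - batch.created).days
    if age > RETENTION_DAYS[batch.category]:
        return "delete"
    if age > ARCHIVE_AFTER_DAYS:
        return "archive"
    return "retain"
```

Checking legal holds first matters: a deletion job must never remove evidence that is under hold, regardless of age.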

Moreover, organizations must plan for exceptional retrieval scenarios. Legal holds and forensic investigations require rapid access to archived logs. Therefore, metadata indexing and search capabilities remain crucial even for cold storage implementations.
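Metadata indexing for cold storage can be as simple as a manifest recording the source and time range of each archive object, so an investigation restores only the files that overlap its window. This is a minimal sketch; the object keys and source names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class ArchiveEntry:
    object_key: str  # hypothetical key of one compressed archive file
    source: str
    start: datetime
    end: datetime


def archives_for(manifest, source: str, start: datetime, end: datetime) -> list:
    """Return keys of archives for one source whose time range overlaps [start, end]."""
    return [
        e.object_key
        for e in manifest
        if e.source == source and e.start <= end and e.end >= start
    ]


manifest = [
    ArchiveEntry("idp/2023-01.gz", "idp_signin", datetime(2023, 1, 1), datetime(2023, 1, 31, 23, 59)),
    ArchiveEntry("idp/2023-02.gz", "idp_signin", datetime(2023, 2, 1), datetime(2023, 2, 28, 23, 59)),
    ArchiveEntry("fw/2023-01.gz", "firewall", datetime(2023, 1, 1), datetime(2023, 1, 31, 23, 59)),
]
print(archives_for(manifest, "idp_signin", datetime(2023, 1, 25), datetime(2023, 2, 3)))
```

Because the manifest is small and always online, it can answer "what do we need to restore?" without touching the archives themselves.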

Balancing Retention Duration with Investigation Requirements

Retention periods should align with typical investigation timelines and threat actor behavior patterns. Advanced persistent threats often maintain long-term access, requiring extended log retention for complete timeline reconstruction.

Risk tolerance affects retention decisions. Organizations with higher risk profiles might extend retention periods to support thorough investigations. Conversely, lower-risk environments might optimize for cost efficiency with shorter retention windows.

Compression and deduplication significantly reduce storage requirements. Text-based logs are highly repetitive, so compression commonly shrinks them by 80-90%. Sampling can further reduce volume for less critical log types, but sampled data no longer supports exact counts or complete timelines, so reserve it for sources where that loss is acceptable.
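The effect of compression and deduplication is easy to measure on your own data. This sketch uses Python's standard `gzip` module and a `Counter`-based deduplication that keeps a repeat count per line, since discarding duplicates outright would erase frequency signals such as brute-force attempts. The synthetic log lines are hypothetical and deliberately repetitive, so real-world savings will differ.

```python
import gzip
from collections import Counter


def compression_savings(lines: list) -> float:
    """Fraction of bytes saved by gzip-compressing newline-joined log lines."""
    raw = "\n".join(lines).encode("utf-8")
    return 1 - len(gzip.compress(raw)) / len(raw)


def deduplicate(lines: list) -> list:
    """Collapse identical lines into (line, count) pairs, preserving first-seen order."""
    return list(Counter(lines).items())


# Synthetic health-check logs: only the seconds field varies
lines = [
    f"2024-06-30T12:00:{i % 60:02d}Z host=web01 action=GET path=/health status=200"
    for i in range(1000)
]
print(f"gzip saves {compression_savings(lines):.0%}")
print(f"{len(lines)} lines -> {len(deduplicate(lines))} unique")
```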

Advanced Strategies for SOC Visibility Optimization

Context enrichment transforms raw telemetry into actionable intelligence. Linking logs across identity, network, and endpoint sources provides comprehensive attack path visibility. Furthermore, external threat intelligence integration adds context that helps analysts distinguish genuine threats from benign activities.
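As an illustration of cross-source linking, the sketch below attaches recent identity sign-ins to each endpoint alert for the same user. The event field names (`user`, `time`, `src_ip`) and the 30-minute lookback window are assumptions, and the nested loop is fine for a sketch; at scale you would index sign-ins by user first.

```python
from datetime import datetime, timedelta


def enrich_alerts(alerts, signins, window=timedelta(minutes=30)):
    """Attach sign-ins by the same user that occurred within `window` before each alert."""
    enriched = []
    for alert in alerts:
        related = [
            s for s in signins
            if s["user"] == alert["user"]
            and timedelta(0) <= alert["time"] - s["time"] <= window
        ]
        enriched.append({**alert, "recent_signins": related})
    return enriched


alerts = [{"user": "alice", "host": "web01", "time": datetime(2024, 6, 30, 10, 20)}]
signins = [
    {"user": "alice", "src_ip": "198.51.100.7", "time": datetime(2024, 6, 30, 10, 5)},
    {"user": "alice", "src_ip": "203.0.113.9", "time": datetime(2024, 6, 30, 8, 0)},
    {"user": "bob", "src_ip": "192.0.2.1", "time": datetime(2024, 6, 30, 10, 10)},
]
print(enrich_alerts(alerts, signins)[0]["recent_signins"])
```

An analyst triaging the endpoint alert now sees where the user authenticated from just before it fired, without pivoting to a second console.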

Machine learning applications enhance visibility effectiveness. Behavioral analysis identifies unusual patterns that indicate potential threats. Additionally, automated correlation reduces manual analysis workload while improving detection accuracy.
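Behavioral analysis starts from a baseline of normal activity. The sketch below substitutes a simple statistical technique (a z-score against historical daily counts) for machine learning, to show the baseline-and-deviation idea; the threshold of 3 standard deviations is an assumed default.

```python
from statistics import mean, stdev


def is_anomalous(history: list, today: int, threshold: float = 3.0) -> bool:
    """Flag today's count if it exceeds the baseline mean by more than `threshold` standard deviations."""
    if len(history) < 2:
        return False  # not enough data to form a baseline
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return today > mu  # flat baseline: any increase is unusual
    return (today - mu) / sigma > threshold


# Hypothetical daily failed-login counts for one account
history = [10, 12, 11, 9, 10, 13, 11]
print(is_anomalous(history, 40))  # True
print(is_anomalous(history, 12))  # False
```

Production behavioral analytics use richer features and models, but they share this structure: learn what normal looks like, then score deviations from it.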

Platforms like Kindi’s AI-powered OSINT automation and link analysis platform demonstrate how intelligent correlation can accelerate security investigations. By automatically connecting seemingly disparate data points, these tools help SOC analysts identify threat relationships that manual analysis might miss.

Performance optimization becomes critical as telemetry volume increases. Efficient indexing strategies enable rapid searches across large log volumes. Similarly, query optimization reduces resource consumption while maintaining investigation speed.
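Indexing is what keeps searches fast as volume grows. This minimal sketch builds an inverted index from `(field, value)` pairs to event positions, so a query intersects small sets instead of scanning every event; the event fields are hypothetical.

```python
from collections import defaultdict


def build_index(events):
    """Map each (field, value) pair to the set of event positions containing it."""
    index = defaultdict(set)
    for pos, event in enumerate(events):
        for field, value in event.items():
            index[(field, value)].add(pos)
    return index


def search(index, **criteria):
    """Return positions of events matching every field=value criterion (AND semantics)."""
    sets = [index.get((f, v), set()) for f, v in criteria.items()]
    return sorted(set.intersection(*sets)) if sets else []


events = [
    {"user": "alice", "action": "login", "host": "web01"},
    {"user": "bob", "action": "login", "host": "web02"},
    {"user": "alice", "action": "sudo", "host": "web01"},
]
idx = build_index(events)
print(search(idx, user="alice", action="sudo"))  # [2]
```

Using `index.get` rather than indexing the `defaultdict` directly avoids creating empty entries for values that were never seen.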

Integration with threat intelligence platforms enhances visibility value by adding external context to internal telemetry. This integration helps analysts understand whether detected activities align with known threat actor patterns or represent novel attack methods.

Implementation Roadmap for Enhanced SOC Visibility

Short-term improvements focus on immediate visibility gaps. Organizations should audit current telemetry sources and identify critical missing coverage areas. Additionally, implementing automated log collection from identity providers and cloud services addresses the most common blind spots.

Medium-term initiatives involve centralization and context enrichment. Establishing unified log management platforms enables comprehensive correlation across all telemetry sources. Furthermore, implementing automated retention policies ensures consistent data management practices.

Long-term optimization incorporates advanced analytics and continuous improvement processes. Machine learning integration automates routine analysis tasks while highlighting anomalies that require human investigation. Moreover, regular assessments ensure visibility strategies evolve with changing threat landscapes.

- Conduct comprehensive telemetry source inventory to identify coverage gaps

- Implement real-time identity and cloud log streaming to central platforms

- Establish tiered storage architectures for cost-effective long-term retention

- Deploy context enrichment capabilities linking multiple telemetry sources

- Create automated retention policies aligned with compliance requirements

- Integrate threat intelligence feeds to enhance detection accuracy

- Develop performance optimization strategies for large-scale log analysis

- Establish continuous assessment processes for visibility effectiveness

Measuring Success and Continuous Improvement

Effective measurement requires establishing baseline metrics for visibility coverage and investigation efficiency. Key performance indicators include mean time to detection, false positive rates, and investigation completion times. Additionally, compliance audit results provide external validation of retention strategies.
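Detection and response metrics can be computed directly from incident records. This sketch assumes each incident records when malicious activity began, when it was detected, and when it was contained; measuring response time from detection to containment is one common definition, and your organization's may differ.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean


@dataclass
class Incident:
    first_malicious_activity: datetime
    detected: datetime
    contained: datetime


def mean_hours(deltas) -> float:
    return mean(d.total_seconds() / 3600 for d in deltas)


def soc_metrics(incidents) -> dict:
    """Mean time to detect (activity -> detection) and to respond (detection -> containment)."""
    return {
        "mttd_hours": mean_hours(i.detected - i.first_malicious_activity for i in incidents),
        "mttr_hours": mean_hours(i.contained - i.detected for i in incidents),
    }


incidents = [
    Incident(datetime(2024, 6, 1, 0), datetime(2024, 6, 1, 4), datetime(2024, 6, 1, 6)),
    Incident(datetime(2024, 6, 2, 0), datetime(2024, 6, 2, 10), datetime(2024, 6, 2, 16)),
]
print(soc_metrics(incidents))
```

Tracking these values before and after a visibility change gives the baseline comparison this section calls for.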

Regular assessment identifies emerging blind spots as organizational infrastructure evolves. New applications, cloud services, and identity systems create additional telemetry requirements. Therefore, continuous monitoring ensures visibility strategies remain comprehensive.

Cost optimization metrics balance security effectiveness with budget constraints. Organizations should track cost per gigabyte for different storage tiers and retrieval frequencies for archived logs. This data supports informed decisions about retention policies and storage architectures.
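A rough monthly cost model makes these trade-offs concrete. The per-GB storage and retrieval prices below are hypothetical placeholders, not any provider's actual rates; substitute your own contract or list prices.

```python
# Hypothetical prices in USD per GB
PRICE_PER_GB_MONTH = {"hot": 0.10, "warm": 0.02, "cold": 0.004}
RETRIEVAL_PER_GB = {"hot": 0.0, "warm": 0.01, "cold": 0.03}


def monthly_cost(stored_gb: dict, retrieved_gb: dict) -> float:
    """Storage cost for data held in each tier plus retrieval cost for data read back."""
    storage = sum(PRICE_PER_GB_MONTH[tier] * gb for tier, gb in stored_gb.items())
    retrieval = sum(RETRIEVAL_PER_GB[tier] * gb for tier, gb in retrieved_gb.items())
    return round(storage + retrieval, 2)


tiered = monthly_cost({"hot": 1000, "warm": 5000, "cold": 20000}, {"cold": 500})
all_hot = monthly_cost({"hot": 26000}, {})
print(f"tiered: ${tiered:,.2f}/month vs all-hot: ${all_hot:,.2f}/month")
```

If retrieval volumes from cold storage climb, the retrieval term starts to erode the savings, which is a signal to revisit tier boundaries.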

Furthermore, feedback from incident response activities reveals visibility gaps that impact real investigations. Post-incident reviews should specifically examine whether available telemetry supported complete timeline reconstruction and root cause analysis.

Success ultimately depends on whether enhanced visibility translates into improved security outcomes. Organizations should track fewer successful attacks, faster threat containment, and a stronger compliance posture as evidence that their visibility improvements are working.

Conclusion: Building Resilient SOC Operations Through Enhanced Visibility

Comprehensive SOC visibility requires balancing multiple competing priorities: coverage breadth, cost management, compliance requirements, and operational efficiency. However, organizations that implement effective SOC visibility best practices gain significant advantages in threat detection and incident response capabilities.

Success demands proactive planning rather than reactive gap-filling. Organizations must assess current visibility posture, identify critical blind spots, and implement systematic improvements across identity, cloud, and traditional monitoring domains.

The investment in enhanced visibility pays dividends through reduced breach impact, faster incident response, and improved compliance posture. Moreover, comprehensive telemetry enables advanced analytics that transform security operations from reactive to predictive.

Modern SOC operations require modern visibility strategies. Organizations that embrace comprehensive telemetry coverage, intelligent retention policies, and advanced correlation capabilities will outperform those that rely on traditional, fragmented approaches.

Want to strengthen your OSINT skills and enhance your SOC visibility capabilities? Check out our OSINT courses for practical, hands-on training. Additionally, discover how Kindi can transform your security investigations through intelligent automation and comprehensive link analysis.

FAQ

What are the most critical blind spots in modern SOC visibility?

The most critical blind spots include identity telemetry gaps (privilege escalation events, federated authentication), cloud service monitoring (shadow resources, configuration drift), and encrypted network traffic analysis. Additionally, many organizations lack comprehensive mobile device and IoT endpoint visibility, creating attack vectors that bypass traditional monitoring systems.

How long should SOCs retain different types of security logs?

Retention periods vary by log type and compliance requirements. Authentication and security event logs typically require 1-2 years retention, while configuration change logs might need 3-5 years. Network flow logs can often use shorter periods (90-180 days) due to volume, while audit logs for regulated industries may require 7+ years retention.

What is the most cost-effective approach to long-term log retention?

Tiered storage architectures provide the most cost-effective solution. Keep recent logs (30-90 days) in high-performance storage for active analysis, move older logs to warm storage for occasional access, and archive long-term retention logs in cold storage. Implement compression, deduplication, and intelligent sampling to further reduce costs while maintaining investigative value.

How can SOCs prioritize which telemetry sources to implement first?

Prioritize based on risk assessment and attack path analysis. Start with identity provider logs and cloud service telemetry, as these represent the most common blind spots. Next, focus on critical asset monitoring and high-privilege user activities. Finally, extend coverage to comprehensive network flows and application-specific telemetry based on your organization’s specific threat model.

What role does automation play in SOC visibility best practices?

Automation is essential for managing modern SOC visibility at scale. Key automation areas include log collection and forwarding, retention policy enforcement, correlation rule management, and alert triage. Additionally, automated discovery processes identify new assets requiring monitoring, while machine learning enhances threat detection accuracy and reduces false positive rates.