Traditional honeypots have long served as digital decoys in cybersecurity arsenals, luring attackers into controlled environments to study their tactics. But in 2025, a revolutionary shift is underway. Large Language Models (LLMs) are transforming static honeypots into dynamic, intelligent deception systems that can engage attackers in realistic conversations, adapt to their behavior in real-time, and extract unprecedented threat intelligence.

For Security Operations Centers (SOC) drowning in alerts and struggling to identify sophisticated threats, AI-powered honeypots represent a game-changing advancement. These systems don’t just trap attackers—they learn from them, building comprehensive threat profiles that enhance your entire security posture. If you’re responsible for threat intelligence, incident response, or SOC operations, understanding this emerging technology isn’t optional—it’s essential.

What Makes LLM-Enhanced Honeypots Different?

Beyond Static Decoys

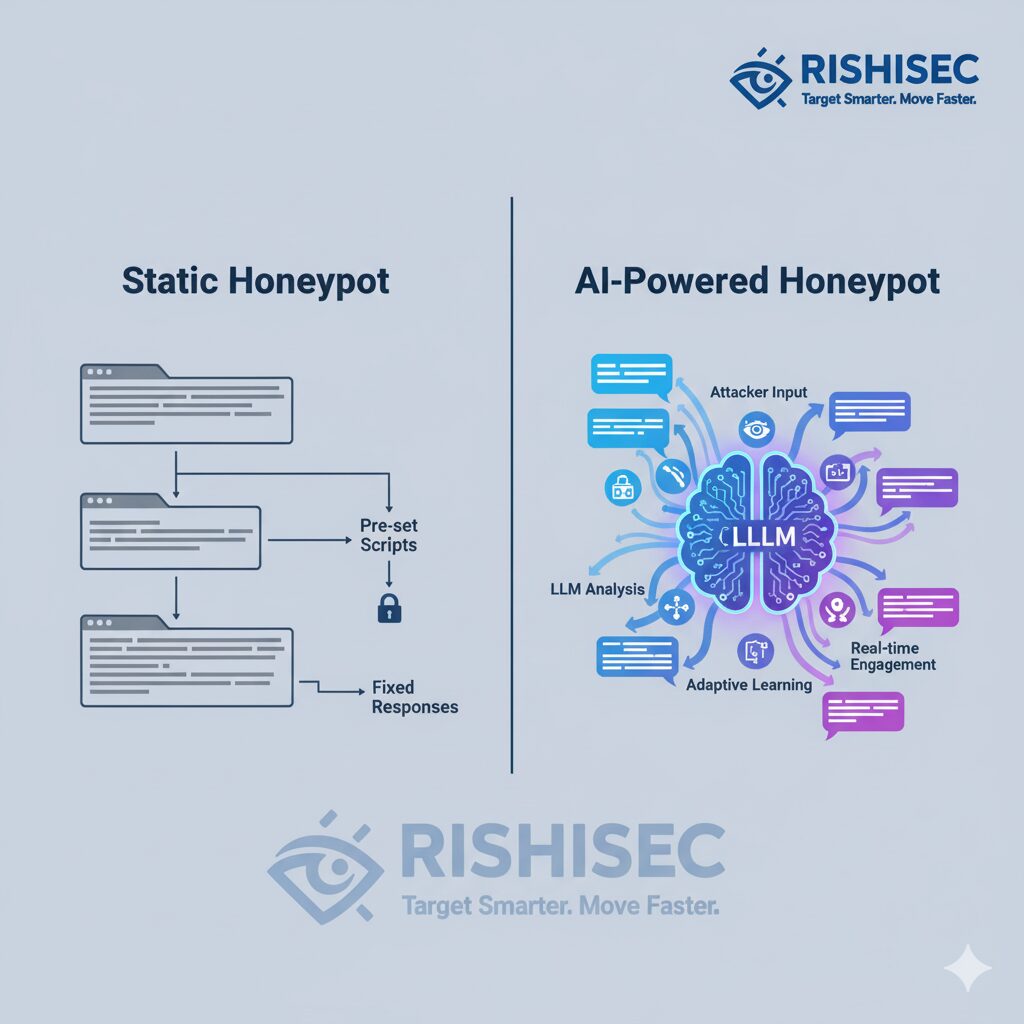

Traditional honeypots operate on predetermined scripts and responses. An attacker probing a conventional honeypot quickly recognizes patterns, limited interactions, and artificial constraints. Once detected, the honeypot loses its value, and the attacker moves on.

LLM-enhanced honeypots fundamentally change this dynamic by:

- Generating contextually appropriate responses that mirror real system behavior

- Adapting conversation flow based on attacker queries and actions

- Maintaining engagement longer through realistic, unpredictable interactions

- Learning from each interaction to improve future deception effectiveness

- Creating believable personas for social engineering scenarios

This isn’t just an incremental improvement—it’s a paradigm shift in how deception technology operates. When integrated with platforms like Kindi, these AI-powered systems can correlate honeypot intelligence with broader OSINT data, creating a comprehensive threat landscape view.

The Intelligence Goldmine

Every interaction with an LLM honeypot generates valuable threat intelligence:

Tactical Intelligence:

- Attack vectors and entry points attempted

- Tools and frameworks utilized

- Command sequences and syntax preferences

- Exploitation techniques and methodologies

Behavioral Intelligence:

- Decision-making patterns during reconnaissance

- Persistence mechanisms deployed

- Lateral movement strategies

- Data exfiltration methods

Strategic Intelligence:

- Campaign objectives and target selection criteria

- Attacker skill levels and sophistication

- Potential attribution indicators

- Emerging threat trends and techniques

This multi-layered intelligence feeds directly into your threat intelligence operations, enabling proactive defense strategies rather than reactive incident response.

Implementing AI-Powered Honeypots in Your SOC

Architecture and Integration

Successful deployment requires careful planning and integration with existing security infrastructure:

1. Network Positioning

Place LLM honeypots strategically across your network topology:

- Perimeter zones to catch external reconnaissance

- Internal segments to detect lateral movement

- High-value asset areas to protect critical systems

- Cloud environments to monitor multi-cloud threats

2. Data Pipeline Integration

Connect honeypot intelligence to your security stack:

- SIEM platforms for correlation and alerting

- Threat intelligence platforms for enrichment

- SOAR systems for automated response workflows

- OSINT tools for external context

3. Response Automation

Configure automated actions based on honeypot triggers:

- Immediate isolation of compromised systems

- Dynamic firewall rule updates

- Threat actor IP blocking across infrastructure

- Alert escalation to incident response teams

Customization for Maximum Effectiveness

Generic honeypots are easily identified. Customization is critical:

Environment Mimicry:

- Mirror your actual technology stack and configurations

- Replicate realistic file structures and naming conventions

- Include believable but fake sensitive data

- Maintain consistent system response times and behaviors

Persona Development:

- Create authentic user profiles with realistic activity patterns

- Generate believable email communications and documents

- Establish credible access levels and permissions

- Develop consistent backstories for social engineering scenarios

Adaptive Learning:

- Continuously update LLM models based on attacker interactions

- Incorporate new attack techniques into response libraries

- Refine deception strategies based on effectiveness metrics

- Share intelligence across honeypot deployments

Real-World Applications and Use Cases

Advanced Persistent Threat (APT) Detection

LLM honeypots excel at identifying sophisticated, patient attackers who evade traditional detection:

A financial services SOC deployed AI-powered honeypots mimicking executive workstations. Over three months, the system engaged with an APT group conducting reconnaissance. The LLM maintained realistic conversations about “confidential merger documents,” keeping attackers engaged while collecting:

- Custom malware samples

- Command and control infrastructure details

- Attack timeline and operational patterns

- Potential attribution indicators linking to known threat groups

This intelligence enabled the organization to harden defenses before the actual attack phase began, preventing a potentially catastrophic breach.

Insider Threat Identification

Internal threats pose unique challenges because insiders already have legitimate access. LLM honeypots can identify malicious insiders through:

- Unusual access patterns to decoy systems

- Attempts to exfiltrate fake sensitive data

- Reconnaissance of systems outside normal job functions

- Social engineering attempts against AI personas

When combined with behavioral analytics and OSINT, these systems create a comprehensive insider threat detection capability.

Ransomware Gang Profiling

Ransomware operators often conduct extensive reconnaissance before deployment. LLM honeypots can:

- Engage attackers in realistic system exploration

- Provide fake backup systems to study encryption techniques

- Capture ransom negotiation tactics and communication patterns

- Identify infrastructure used for command and control

This intelligence helps organizations understand ransomware gang operations, improving both prevention and response capabilities.

Overcoming Implementation Challenges

Resource Requirements

Computational Demands:

Running LLM models requires significant processing power. Consider:

- Cloud-based LLM services for scalability

- Edge deployment for latency-sensitive scenarios

- Hybrid approaches balancing cost and performance

- GPU acceleration for real-time response generation

Expertise Gaps:

Implementing AI-powered honeypots requires specialized skills:

- Machine learning and LLM fine-tuning expertise

- Threat intelligence analysis capabilities

- Network architecture and security engineering knowledge

- Continuous monitoring and optimization resources

Legal and Ethical Considerations

Deception technologies raise important questions:

Legal Compliance:

- Ensure honeypot activities comply with applicable laws

- Document authorization and oversight procedures

- Maintain clear boundaries between monitoring and entrapment

- Consult legal counsel on jurisdiction-specific requirements

Ethical Guidelines:

- Establish clear policies on data collection and retention

- Protect privacy of legitimate users who accidentally access honeypots

- Define acceptable engagement levels with attackers

- Create transparency frameworks for stakeholders

False Positive Management

Even sophisticated AI systems can generate false positives:

Mitigation Strategies:

- Implement multi-factor verification before escalation

- Correlate honeypot alerts with other security signals

- Establish confidence scoring for threat classifications

- Create human-in-the-loop review processes for critical alerts

Integration with Broader Threat Intelligence Programs

LLM honeypots shouldn’t operate in isolation. Maximum value comes from integration:

OSINT Correlation

Connect honeypot intelligence with open-source intelligence:

- Cross-reference attacker infrastructure with known malicious IPs

- Correlate tactics with published threat reports

- Identify connections to known threat actor groups

- Enrich internal intelligence with external context

Platforms like Kindi excel at this correlation, automatically enriching honeypot data with comprehensive OSINT intelligence to provide complete threat actor profiles.

Threat Hunting Enhancement

Use honeypot intelligence to drive proactive threat hunting:

- Develop hunt hypotheses based on observed attacker behaviors

- Search for similar tactics in production environments

- Identify potential compromises that evaded initial detection

- Validate security control effectiveness

Red Team Collaboration

Share honeypot insights with red team operations:

- Test deception effectiveness against internal security testing

- Identify gaps in honeypot coverage and realism

- Refine attacker engagement strategies

- Validate threat intelligence accuracy

The Future of AI-Powered Deception

As we move deeper into 2025 and beyond, expect rapid evolution:

Emerging Capabilities:

- Multi-modal deception incorporating voice and video

- Autonomous honeypot networks that coordinate responses

- Predictive models that anticipate attacker next moves

- Quantum-resistant deception for post-quantum threats

Industry Adoption:

- Standardization of LLM honeypot frameworks

- Shared threat intelligence from distributed honeypot networks

- Regulatory requirements for deception technology deployment

- Integration into managed security service offerings

Conclusion: Turning the Tables on Attackers

AI-powered honeypots with LLM technology represent more than just an evolution in deception—they’re a fundamental shift in the attacker-defender dynamic. By engaging adversaries in realistic, adaptive interactions, these systems extract invaluable intelligence while keeping threats away from production systems.

For SOC teams, threat intelligence analysts, and security leaders, the question isn’t whether to adopt this technology, but how quickly you can implement it effectively. The organizations that master AI-powered deception today will have significant advantages in tomorrow’s threat landscape.

Start by assessing your current honeypot capabilities, identifying gaps in threat intelligence collection, and exploring how LLM-enhanced deception can strengthen your security posture. The attackers are already using AI—it’s time to turn their own weapons against them.