Artificial intelligence has fundamentally transformed the cybersecurity landscape, creating an unprecedented paradox. While 73% of organizations now leverage AI in cybersecurity for enhanced threat detection capabilities, the same technology empowers adversaries to launch increasingly sophisticated attacks. This dual nature presents both extraordinary opportunities and significant challenges for security professionals navigating today’s threat environment.

For cybersecurity consultants, understanding this balance is crucial to developing comprehensive security strategies. AI-driven threat detection systems have changed how we identify and respond to threats. However, cybercriminals are equally adept at weaponizing artificial intelligence to create polymorphic malware, deepfake social engineering attacks, and automated vulnerability exploitation tools.

AI as the Ultimate Cyber Defender

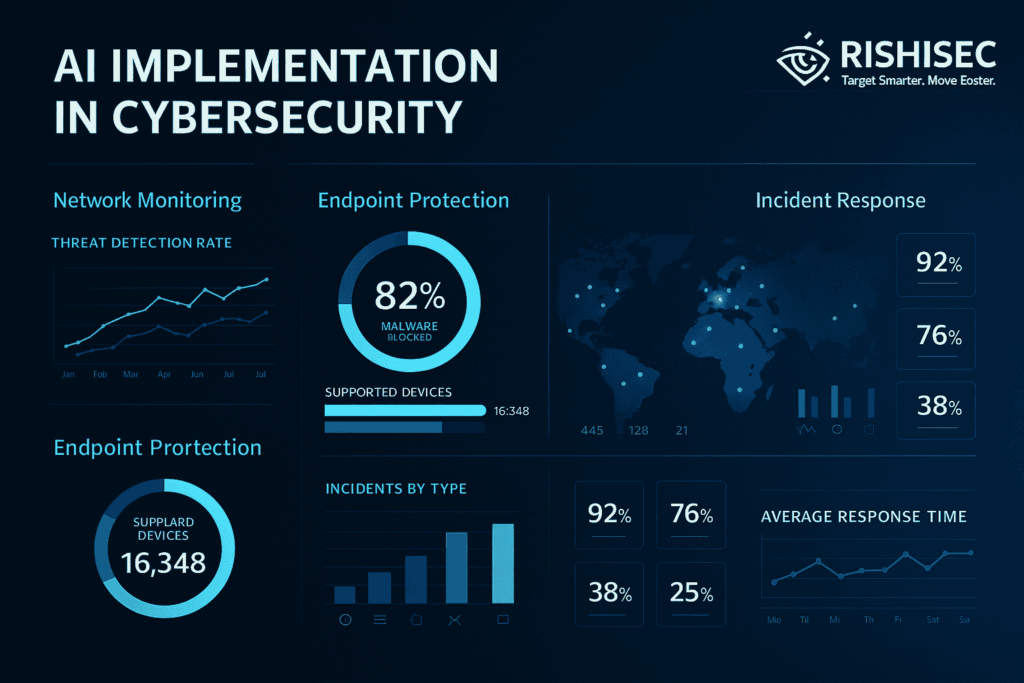

Modern AI-powered security systems have shifted traditional reactive approaches toward proactive, intelligent defense mechanisms. Machine learning algorithms can analyze vast datasets in real time, identifying patterns that would be impractical for human analysts to detect manually. These systems excel at behavioral analysis, anomaly detection, and threat hunting at scale.

Security Operations Centers (SOCs) worldwide are seeing significant improvements in operational efficiency through AI integration. Automated threat classification, incident prioritization, and response orchestration can reduce mean time to detection (MTTD) from hours to minutes in some deployments. Furthermore, AI-driven systems can correlate seemingly unrelated events across multiple data sources, providing threat intelligence that enhances overall security posture.
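As a minimal sketch of the cross-source correlation described above, the following Python groups events by a shared entity and flags entities reported by two or more sources within a short time window. The event fields (`source`, `entity`, `ts`) and the 300-second window are illustrative assumptions, not the schema of any particular product.

```python
from collections import defaultdict


def correlate(events, window_s=300):
    """Flag entities reported by 2+ distinct sources within window_s seconds."""
    by_entity = defaultdict(list)
    for ev in events:
        by_entity[ev["entity"]].append(ev)

    flagged = {}
    for entity, evs in by_entity.items():
        evs.sort(key=lambda e: e["ts"])
        start = 0
        for end in range(len(evs)):
            # Advance the left edge until the window spans at most window_s.
            while evs[end]["ts"] - evs[start]["ts"] > window_s:
                start += 1
            sources = {e["source"] for e in evs[start:end + 1]}
            if len(sources) >= 2:
                flagged[entity] = sorted(sources)
                break
    return flagged


# Hypothetical events from two log sources (timestamps in seconds).
events = [
    {"source": "edr", "entity": "host-17", "ts": 1000},
    {"source": "proxy", "entity": "host-17", "ts": 1120},
    {"source": "edr", "entity": "host-42", "ts": 1000},
    {"source": "proxy", "entity": "host-42", "ts": 5000},
]
print(correlate(events))  # {'host-17': ['edr', 'proxy']}
```

Only `host-17` is flagged, because its two events fall inside the same window. Production systems typically use richer entity resolution and learned scoring rather than a fixed rule like this one.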

Advanced threat detection platforms now incorporate natural language processing to analyze dark web communications, social media threats, and phishing campaigns. This capability extends to OSINT investigations, where tools like Kindi leverage AI to automate link analysis and accelerate intelligence gathering processes, making collaboration between analysts significantly more efficient.

Key AI Defense Capabilities

- Behavioral Analytics: AI systems continuously monitor user and entity behavior, establishing baseline patterns and detecting deviations that may indicate compromise or insider threats.

- Predictive Threat Modeling: Machine learning algorithms analyze historical attack data to predict potential future threats and vulnerabilities before they are exploited.

- Automated Incident Response: AI-driven orchestration platforms can automatically contain threats, isolate affected systems, and initiate remediation procedures without human intervention.

- Continuous Vulnerability Assessment: AI systems can continuously scan and assess network infrastructure, applications, and configurations for potential security weaknesses.
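The baseline-and-deviation idea behind behavioral analytics can be sketched with a simple z-score check. This is a deliberately simplified stand-in for the learned models real products use; the function name, the sample data, and the threshold of 3 standard deviations are assumptions chosen for illustration.

```python
from statistics import mean, stdev


def is_anomalous(baseline, observed, threshold=3.0):
    """Return True if observed is more than `threshold` standard deviations from the baseline mean."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any change counts as a deviation.
        return observed != mu
    return abs(observed - mu) / sigma > threshold


# Hypothetical daily login counts for one user over a week.
logins_per_day = [4, 5, 6, 5, 4, 6, 5]

print(is_anomalous(logins_per_day, 5))   # False
print(is_anomalous(logins_per_day, 40))  # True
```

A single-feature threshold like this tends to produce false positives on naturally bursty behavior, which is one reason production systems combine many signals.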

The Dark Side: AI-Powered Adversarial Threats

While AI strengthens defensive capabilities, threat actors are equally innovative in weaponizing these technologies. Adversarial AI represents a significant evolution in cyber threats, enabling attackers to launch more sophisticated, adaptive, and scalable attacks than ever before.

Polymorphic malware powered by machine learning can continuously modify its code structure, evading traditional signature-based detection systems. These adaptive threats can learn from security responses, evolving their tactics in real time to maintain persistence within compromised environments. Additionally, AI-generated deepfakes and synthetic media are making social engineering attacks far more convincing and difficult to detect.
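To illustrate why exact-match signatures struggle against code that changes shape, the sketch below treats a SHA-256 hash as the "signature" of a harmless placeholder byte string. Real signature engines are more sophisticated than a single hash lookup, so this is an assumption-laden simplification; the payload and the `known_bad` set are invented for the example.

```python
import hashlib


def signature(payload: bytes) -> str:
    """Exact-match signature: the SHA-256 digest of the payload bytes."""
    return hashlib.sha256(payload).hexdigest()


# Harmless placeholder standing in for a previously catalogued sample.
original = b"EXAMPLE-PAYLOAD-v1"
known_bad = {signature(original)}

# A trivially altered copy: one padding byte appended, behavior unchanged.
mutated = original + b"\x00"

print(signature(original) in known_bad)  # True
print(signature(mutated) in known_bad)   # False
```

Changing a single byte produces a different digest, so the lookup misses. This is the gap that behavior-based and ML-based detection aims to close.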

Automated reconnaissance tools can now scan millions of targets simultaneously, identifying vulnerabilities and crafting personalized attack vectors. These systems can analyze public information from social media, corporate websites, and data breaches to create highly targeted spear-phishing campaigns. For organizations focused on mastering OSINT for red teams, understanding these AI-driven reconnaissance capabilities becomes essential for comprehensive security assessments.

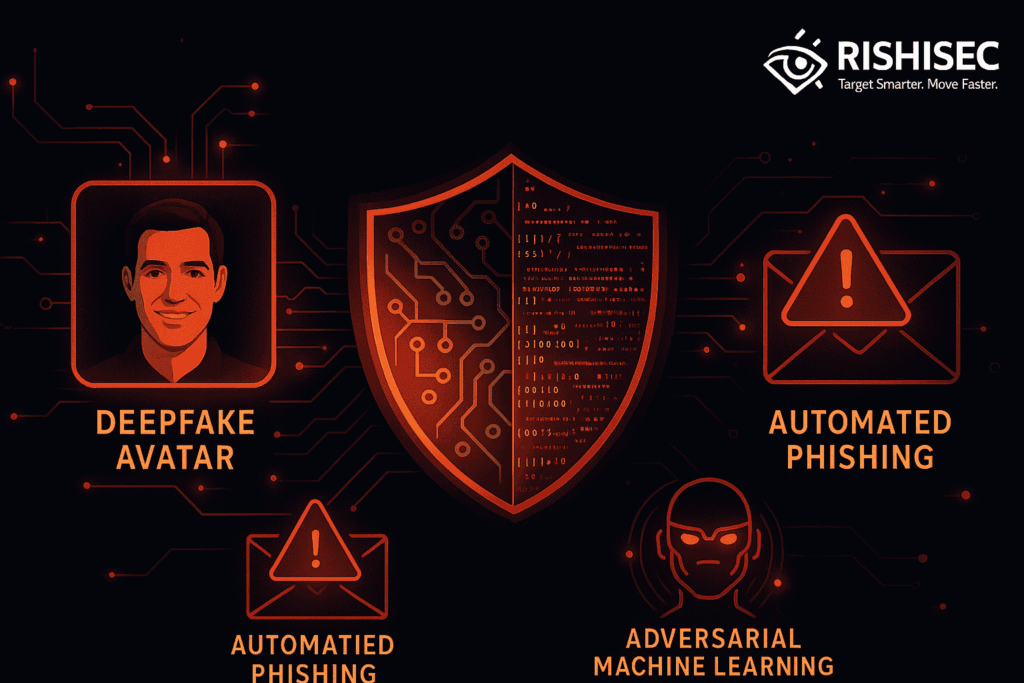

Emerging AI Threat Vectors

- Adversarial Machine Learning: Attacks designed to fool AI security systems by feeding them carefully crafted input data that causes misclassification or system failures.

- AI-Generated Phishing: Sophisticated phishing campaigns that use natural language generation to create personalized, contextually relevant messages that are difficult to distinguish from legitimate communications.

- Deepfake Social Engineering: Video and audio deepfakes used to impersonate executives, colleagues, or trusted individuals in business email compromise and wire fraud schemes.

- Automated Zero-Day Discovery: AI systems that could automatically discover and exploit previously unknown vulnerabilities, potentially faster than human researchers.
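The adversarial machine learning item above can be made concrete with a toy linear classifier, which is useful when red-teaming your own models. The sketch assumes a hypothetical three-feature detector that labels an input malicious when its score is positive. The weights, features, and step size `eps` are invented for illustration, and the perturbation follows the general idea of gradient-sign attacks rather than any specific published tool.

```python
def score(w, b, x):
    """Linear decision score: positive means 'malicious' in this toy model."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def sign(v):
    return (v > 0) - (v < 0)


def perturb(w, x, eps):
    """Shift every feature by eps against the sign of its weight, lowering the score."""
    return [xi - eps * sign(wi) for wi, xi in zip(w, x)]


# Hypothetical model parameters and one input the model flags correctly.
w = [2.0, -1.0, 0.5]
b = -1.0
x = [1.0, 0.5, 1.0]

x_adv = perturb(w, x, eps=0.4)

print(score(w, b, x) > 0)      # True: original input is flagged
print(score(w, b, x_adv) > 0)  # False: small perturbation flips the label
```

Each feature moved by only 0.4, yet the classification flipped. Running this kind of check against your own detectors is one way to estimate how much margin they have.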

Strategic Implementation: Building Resilient AI-Enhanced Security

Successfully integrating AI into cybersecurity requires a strategic approach that acknowledges both its defensive potential and the adversarial risks it poses. Organizations must develop comprehensive frameworks that leverage AI capabilities while implementing safeguards against AI-powered threats.

The foundation of effective AI security implementation begins with robust data governance and model validation processes. Security teams must ensure that their AI systems are trained on high-quality, representative datasets and regularly updated to address evolving threat landscapes. This includes implementing explainable AI practices that provide transparency into decision-making processes, enabling security analysts to understand and validate AI-driven recommendations.

Integration with existing security infrastructure requires careful planning and phased deployment. Organizations should prioritize use cases where AI can deliver immediate value while building capabilities for more complex implementations. For law enforcement and government agencies, understanding essential intelligence frameworks becomes crucial for effective AI integration in their operational workflows.

Best Practices for AI Security Implementation

- Multi-layered Defense: Implement AI across multiple security layers rather than relying on a single AI solution, creating redundancy and comprehensive coverage.

- Human-AI Collaboration: Design systems that augment human expertise rather than replace it, ensuring analysts remain central to critical decision-making processes.

- Continuous Learning: Establish feedback loops that enable AI systems to learn from new threats and false positives, improving accuracy over time.

- Red Team Testing: Regularly test AI security systems against adversarial attacks to identify weaknesses and improve resilience.
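One way to express the human-AI collaboration practice in code is confidence-based routing, where automation handles only high-confidence, low-impact cases and everything else goes to an analyst. The thresholds, the `destructive` flag, and the action names below are hypothetical and would need tuning for a real environment.

```python
def triage(alert, auto_threshold=0.95, review_threshold=0.5):
    """Route an alert by model confidence; destructive actions always go to an analyst."""
    conf = alert["confidence"]
    if conf >= auto_threshold and not alert.get("destructive", False):
        return "auto_contain"
    if conf >= review_threshold:
        return "analyst_review"
    return "log_only"


print(triage({"confidence": 0.99}))                       # auto_contain
print(triage({"confidence": 0.99, "destructive": True}))  # analyst_review
print(triage({"confidence": 0.70}))                       # analyst_review
print(triage({"confidence": 0.10}))                       # log_only
```

Analyst verdicts on the reviewed alerts can then be fed back as labeled training data, which is the feedback loop described under Continuous Learning.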

The Future Landscape: Emerging Trends and Considerations

The evolution of AI in cybersecurity continues to accelerate, with emerging technologies promising both enhanced defensive capabilities and new adversarial challenges. Sufficiently capable quantum computers are expected to break widely used public-key algorithms such as RSA and elliptic-curve cryptography, which will require migration to post-quantum cryptography; AI-assisted tooling may help organizations find and prioritize the systems that need it. Similarly, the rise of edge computing and IoT devices creates new attack surfaces that require intelligent, distributed security approaches.

Regulatory frameworks are evolving to address AI governance in cybersecurity contexts. Organizations must stay informed about compliance requirements while implementing AI solutions that meet both security objectives and regulatory standards. This includes considerations around data privacy, algorithmic bias, and accountability in automated decision-making processes.

The integration of AI with zero-trust security architectures represents a significant trend in enterprise security. These systems can continuously verify user identities, device integrity, and application behavior, making access decisions based on real-time risk assessments. For organizations implementing comprehensive threat intelligence programs, understanding how OSINT integration enhances threat intelligence platforms becomes essential for maximizing AI-driven security investments.
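A risk-based access decision of the kind described above might look like the following sketch. The signal names, weights, and thresholds are assumptions made up for illustration. In practice, `anomaly_score` would come from a behavioral model and the policy would be tuned to the organization's risk tolerance.

```python
from dataclasses import dataclass


@dataclass
class AccessRequest:
    mfa_verified: bool
    device_compliant: bool
    anomaly_score: float  # 0.0 (normal) to 1.0 (highly unusual); assumed model output


def risk_score(req: AccessRequest) -> float:
    """Combine identity, device, and behavior signals into one score (weights are illustrative)."""
    score = 0.5 * req.anomaly_score
    if not req.mfa_verified:
        score += 0.3
    if not req.device_compliant:
        score += 0.2
    return score


def decide(req: AccessRequest, allow_below=0.3, deny_at=0.6) -> str:
    """Evaluate every request on its own; no request is trusted by default."""
    r = risk_score(req)
    if r >= deny_at:
        return "deny"
    if r >= allow_below:
        return "step_up_auth"
    return "allow"


print(decide(AccessRequest(True, True, 0.1)))    # allow
print(decide(AccessRequest(True, False, 0.4)))   # step_up_auth
print(decide(AccessRequest(False, False, 0.8)))  # deny
```

The middle outcome matters: asking for additional verification is often preferable to a hard deny when the signals are ambiguous.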

Preparing for Tomorrow’s Challenges

- Skills Development: Invest in training programs that help security professionals understand AI capabilities and limitations, ensuring effective human-AI collaboration.

- Ethical AI Frameworks: Develop guidelines that address bias, fairness, and transparency in AI security systems, ensuring responsible implementation.

- Industry Collaboration: Participate in threat intelligence sharing initiatives that leverage collective AI insights to improve industry-wide security posture.

- Research Investment: Support ongoing research into adversarial AI defenses and novel attack detection methods.

Building Your AI Security Strategy

Creating an effective AI security strategy requires balancing innovation with proven security principles. Organizations should begin by conducting comprehensive assessments of their current security capabilities and identifying areas where AI can provide the greatest impact. This assessment should include evaluation of existing data sources, infrastructure capabilities, and staff expertise.

Successful implementation requires executive support and cross-functional collaboration between security, IT, and business teams. Organizations must establish clear metrics for measuring AI security effectiveness while ensuring that human oversight remains central to critical security decisions. Additionally, developing incident response procedures that account for AI system failures or adversarial attacks becomes essential for maintaining operational resilience.

| Implementation Phase | Key Activities | Success Metrics |
|---|---|---|
| Assessment & Planning | Current state analysis, use case identification, resource planning | Baseline security metrics, ROI projections |
| Pilot Deployment | Limited scope implementation, staff training, process development | Detection accuracy, false positive rates |
| Scale & Optimize | Full deployment, integration with existing tools, continuous improvement | MTTD/MTTR improvements, operational efficiency gains |
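The success metrics in the table can be computed from data most SOCs already collect. The sketch below assumes alert outcomes are available as confusion-matrix counts and that each incident record carries `started_at` and `detected_at` timestamps in seconds; those field names and the sample numbers are hypothetical.

```python
def detection_metrics(tp, fp, tn, fn):
    """Precision, recall, and false positive rate from confusion-matrix counts."""
    return {
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),
        "false_positive_rate": fp / (fp + tn),
    }


def mean_time_to_detect(incidents):
    """Average of (detected_at - started_at), in seconds, across incidents."""
    deltas = [i["detected_at"] - i["started_at"] for i in incidents]
    return sum(deltas) / len(deltas)


# Hypothetical pilot results: 80 true alerts, 20 false alarms, 20 misses.
metrics = detection_metrics(tp=80, fp=20, tn=880, fn=20)
print(metrics)

incidents = [
    {"started_at": 0, "detected_at": 600},
    {"started_at": 100, "detected_at": 400},
]
print(mean_time_to_detect(incidents))  # 450.0
```

Tracking these values before and after the pilot gives the baseline comparison that the Assessment & Planning phase calls for.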

The journey toward AI-enhanced cybersecurity requires continuous learning and adaptation. As both defensive and adversarial AI capabilities evolve, organizations must remain agile in their approach while maintaining focus on fundamental security principles. Success depends on building systems that leverage AI’s strengths while mitigating its risks through thoughtful implementation and ongoing vigilance.


FAQ

How can organizations protect against AI-powered cyber attacks?

Organizations should implement multi-layered security approaches that include AI-aware detection systems, regular adversarial testing, and continuous monitoring for emerging AI threats. Additionally, maintaining human oversight in critical security decisions and investing in AI security training for staff are essential protective measures.

What are the main challenges in implementing AI for cybersecurity?

Key challenges include data quality and availability, integration with existing security tools, skills gaps among security professionals, and the risk of AI systems being targeted by adversarial attacks. Organizations must also address concerns about false positives and the explainability of AI-driven security decisions.

How do AI-powered threats differ from traditional cyber attacks?

AI-powered threats can be more adaptive, scalable, and difficult to detect than traditional attacks. They can learn from defensive responses, automatically modify their tactics, and operate at machine speed across multiple targets simultaneously. These capabilities make them particularly challenging for conventional security measures to address effectively.

What role does human expertise play in AI-enhanced cybersecurity?

Human expertise remains critical for strategic decision-making, complex threat analysis, and providing context that AI systems cannot fully understand. Security professionals play a crucial role in validating AI recommendations, addressing novel threats, and ensuring that automated responses align with business objectives and regulatory requirements.

How often should AI security systems be updated and retrained?

AI security systems should be continuously updated with new threat intelligence and retrained regularly as the threat landscape evolves. Many organizations use monthly or quarterly retraining cycles, with more frequent updates for critical systems or during periods of high threat activity. Some systems also support online learning, which lets them adapt continuously to new threats.
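A retraining policy like the one described can combine a fixed schedule with a drift trigger. The following is a minimal sketch under assumed parameters: a 90-day maximum model age and a tolerance of 5 percentage points of increase in false positive rate. Neither value comes from a standard.

```python
def should_retrain(days_since_training, baseline_fp_rate, recent_fp_rate,
                   max_age_days=90, drift_tolerance=0.05):
    """Retrain on schedule, or earlier if the false positive rate has drifted upward."""
    if days_since_training >= max_age_days:
        return True
    return (recent_fp_rate - baseline_fp_rate) > drift_tolerance


print(should_retrain(120, 0.02, 0.02))  # True: model is past its maximum age
print(should_retrain(10, 0.02, 0.10))   # True: false positives have drifted up
print(should_retrain(10, 0.02, 0.03))   # False: recent and within tolerance
```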